Other Dataset Options

Configure advanced data loading and storage behaviors

Beyond the basic dataset configuration, Scoop provides advanced options that control how data is loaded, stored, and processed. These options help optimize performance for large datasets and support specialized use cases.

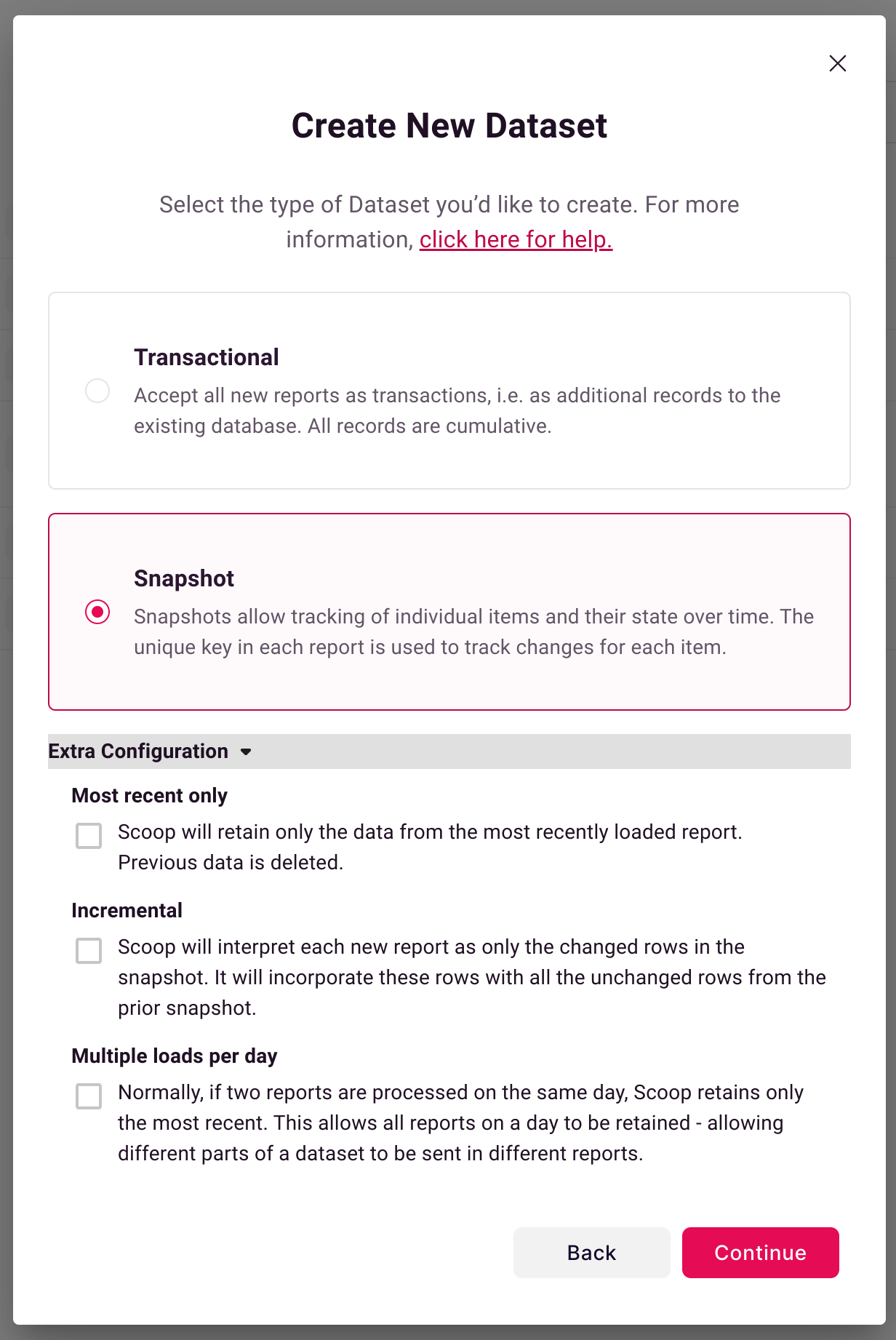

Accessing Advanced Options

Advanced options are in the Extra Configuration section at the bottom of the dataset setup screen:

Most Recent Only (keepOnlyCurrent)

When enabled, Scoop retains only the most recent data load, discarding all historical snapshots.

How It Works

| Setting | Behavior |

|---|---|

| Off (default) | Scoop keeps all historical snapshots, enabling time-based analysis |

| On | Only the latest load is retained; previous data is deleted |

When to Use

Enable this when:

- Your data is entirely contained in each report (complete refresh)

- You don't need historical comparisons

- Storage efficiency is a priority

- The data represents current state only (no historical value)

Keep disabled when:

- You want to track changes over time

- You need pipeline waterfall analysis

- Historical comparisons are valuable

- You're analyzing trends across snapshots

Example Use Cases

| Use Case | Setting | Reason |

|---|---|---|

| CRM Pipeline | Off | Need to see how pipeline changes |

| Product Catalog | On | Current state is all that matters |

| Sales Leaderboard | Off | Want to track rankings over time |

| Real-time Inventory | On | Only current counts are relevant |

Incremental Loading

For large datasets, incremental loading dramatically reduces processing time by loading only changed records.

How It Works

Instead of loading all records every day:

┌────────────────────────────────────────────────────────┐

│ Standard Load (Full Snapshot) │

│ Day 1: Load 100,000 records (all) │

│ Day 2: Load 100,000 records (all) │

│ Day 3: Load 100,000 records (all) │

│ → 300,000 records processed │

└────────────────────────────────────────────────────────┘

┌────────────────────────────────────────────────────────┐

│ Incremental Load (Changes Only) │

│ Day 1: Load 100,000 records (initial) │

│ Day 2: Load 500 records (changes only) │

│ Day 3: Load 750 records (changes only) │

│ → 101,250 records processed │

│ → Scoop reconstructs full snapshot automatically │

└────────────────────────────────────────────────────────┘Snapshot Reconstruction

When a record isn't in the incremental load:

- Scoop checks if the unique key existed previously

- If yes, it carries forward the last known values

- The complete snapshot is reconstructed automatically

Requirements

To use incremental loading:

| Requirement | Description |

|---|---|

| Unique Key | Dataset must have a unique identifier |

| Source Filter | Configure your source report to export only changed records |

| Change Detection | Source system must support "modified since" filtering |

Setting Up Incremental Loads

- Enable the Incremental checkbox in Extra Configuration

- Configure your source report to filter by last modified date

- Ensure the unique key column is included

- Test with a few loads to verify reconstruction works correctly

Performance Benefits

| Dataset Size | Full Load Time | Incremental Time | Improvement |

|---|---|---|---|

| 10,000 rows | 30 sec | 5 sec | 6x faster |

| 100,000 rows | 5 min | 30 sec | 10x faster |

| 1,000,000 rows | 45 min | 2 min | 22x faster |

Times are approximate and depend on record complexity and infrastructure.

Multiple Loads Per Day

Controls whether Scoop retains or replaces data when receiving multiple files on the same day.

Default Behavior (Disabled)

When you send multiple files on the same day:

- The latest file replaces the earlier one

- Only one snapshot per day is retained

- Useful for correcting mistakes or intra-day updates

Enabled Behavior

When Multiple Loads Per Day is enabled:

- All files received on the same day are retained

- Data accumulates within the day

- Each load is distinguishable by SCOOP_RSTI (record source timestamp ID)

When to Use

| Scenario | Setting | Example |

|---|---|---|

| Update corrections | Off | Re-send the day's data to fix errors |

| Accumulating events | On | Multiple event feeds throughout the day |

| Regional reports | On | Separate files from each region |

| Intra-day updates | Off | Refresh pipeline data multiple times daily |

Example: Regional Sales Reports

With Multiple Loads Per Day enabled:

9:00 AM: Load regional_sales_west.csv

11:00 AM: Load regional_sales_central.csv

2:00 PM: Load regional_sales_east.csv

Result: Dataset contains all three regions' data for todayCombining Options

These options can be combined for specific use cases:

| Combination | Use Case |

|---|---|

| Most Recent Only + Incremental | Efficient current-state tracking |

| Incremental + Multiple Loads | Large datasets with multiple sources |

| Most Recent Only + Multiple Loads | Current state from multiple feeds |

Best Practices

Choosing the Right Configuration

- Start with defaults — Most datasets work well with standard settings

- Enable incremental — When datasets exceed 50,000 rows and changes are small

- Enable Most Recent Only — Only when historical analysis isn't needed

- Enable Multiple Loads — Only when consolidating multiple sources

Testing Your Configuration

- Load a small test file first

- Verify data appears correctly

- Load a second file to test replacement/accumulation behavior

- Check that unique key handling works as expected

Monitoring Performance

Watch for these indicators:

- Load times increasing (consider incremental)

- Storage growing unexpectedly (check Most Recent Only setting)

- Missing data (verify Multiple Loads setting)

Troubleshooting

Data Not Accumulating

- Verify Multiple Loads Per Day is enabled

- Check that files have different data (duplicates may merge)

- Confirm files arrived on the same calendar day

Incremental Load Missing Records

- Verify source report includes all changed records

- Check unique key is correctly configured

- Ensure previous snapshot exists for reconstruction

Historical Data Disappearing

- Check Most Recent Only isn't enabled unintentionally

- Verify you're not sending replacement files

- Confirm snapshot retention policies

Related Topics

- Snapshot vs Transactional Datasets - Understanding dataset types

- Intelligent Date Handling - Working with dates

- Unique Keys - Configuring record identification

Updated 3 months ago